If there's one thing that Facebook wants everyone to know it's taking seriously, it's the topic of ethics.

The general idea is that everything it does -- its innovations, initiatives, and new products -- is executed with an undercurrent of ethics, user control, and privacy.

That's what CEO Mark Zuckerberg said in his opening keynote on Day 1 of F8, Facebook's annual developer conference. The new products and features unveiled yesterday, including its up-and-coming Facebook-for-dating services, were built "with privacy and safety in mind."

Today, ethics was one topic that took center stage, especially when it comes to Facebook's much-touted investment in AI to help combat hate speech and support other moderation activity on the site. Here's what company executives had to say.

Ethics and AI Take Center Stage on F8 Day 2

According to yesterday's keynote, your friends won't see if you're using Facebook's dating services, and you won't be matched with them. While that's nice to know, the privacy and ethical specifics weren't revealed in much detail, and actually left a great deal of room for interpretation.

the dating app sounds like has some awfully handy functionality for stalkers .. why is there no one in the right place in FB to point out obvious probs like this? must be folks who can see potential problems (surely?) is it the internal comm process that's gone awry?

— eleanorina (@_eleanorina) May 2, 2018

Today, the company chose to elaborate a bit on its efforts in the "ethical" arena.

AI Ethics

When Zuckerberg appeared before Congress in April, one initiative he repeatedly emphasized throughout his testimony was the advances Facebook has made in the realm of artificial intelligence (AI) and how it can be used to combat the misuse and weaponization of the network.

But one area where it would be difficult to perfect that technology is detecting hate speech. The AI has a long way to go, he said -- though he didn't go into tremendous detail on the company's plans to improve it.

Some problems "lend themselves to AI tools more than others. Hate speech is one of the toughest. ... Contrast that with an area of something like battling terrorist propaganda," says Zuckerberg, listing the times Facebook has successfully done the latter.

— Amanda Zantal-Wiener (@Amanda_ZW) April 10, 2018

Today, Facebook Director of Machine Learning and AI Srinivas Narayanan acknowledged that, displaying cases where a machine might not understand the nuance of what might make a given sentence hateful or abusive. Using examples like, "Look at that pig" and "I'm going to beat you," he said that understanding the context of such statements requires human review.

More from Srinivas Narayanan about the nuances of hate speech and how AI isn’t enough detect context. That requires human review, he says. #F8 pic.twitter.com/kuPkUR1MYc

— Amanda Zantal-Wiener (@Amanda_ZW) May 2, 2018

The Fairness Flow

That is where the area of focus known as AI ethics comes in, said Facebook Data Scientist Isabel Kloumann. Humans, she pointed out, have implicit biases. Using herself as an example, she said most people in the audience would deduce that she is a white female. What they might not consider, she noted, is that she's part Venezuelan, for instance.

And since machines are trained using the data given to them by humans, AI might learn to have these same biases, Kloumann said, which introduces the need for ethics and standards when it comes to building it.

That's why Facebook created the Fairness Flow, which is meant to help determine how much bias has been built into Facebook's algorithm, whether intentional or not. There's a need now, Kloumann said, to ensure that the company's AI is "fair and unbiased," particularly since the company has come under especially intense scrutiny over what content it deems in violation of its standards.

But we have implicit biases, says Isabel Klouman, and so do the machines that learn from the data it’s trained with. So there’s a need for “AI Ethics,” and thinking about how to create “fair and unbiased AI” #F8 pic.twitter.com/npuRDmXg5l

— Amanda Zantal-Wiener (@Amanda_ZW) May 2, 2018

At the April hearings, for instance, many lawmakers accused Facebook of improperly moderating content, allowing some that could have severe impacts -- like ads for narcotics -- to remain live for far too long, while other content might be improperly deemed in violation.

Not long after Zuckerberg's testimony, The New York Times profiled the way this imbalance has manifested in grave violence in Sri Lanka.

A reconstruction of Sri Lanka’s 2017 descent into violence found that Facebook’s newsfeed played a central role in nearly every step from rumor to killing. Facebook declined to respond in detail to questions about its role in the violence. https://t.co/IaA9EGJEIy pic.twitter.com/jPkw3RcOXZ

— The New York Times (@nytimes) April 21, 2018

But Is It Enough?

Overall, Facebook has many (potentially PR-related) reasons to place the concept of ethics at the center of its messaging. The company came under fire in March when it was revealed that data analytics and voter profiling firm Cambridge Analytica -- which, it was announced today, will be shut down by its parent company, SCL, as well as SCL Elections Ltd. -- improperly obtained and used personal user data.

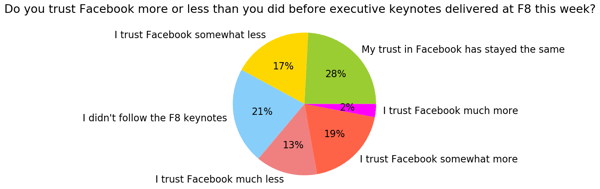

It would appear that, in addition to the questions and accusations mentioned above, people still don't entirely trust the network in terms of protecting their data and privacy. And after Zuckerberg's Congressional hearings, according to our survey of 300 respondents, trust in the company actually fell.

And among those who paid attention to them, the remarks made at F8 thus far had a mixed impact on overall trust in Facebook. In a HubSpot survey of 303 U.S. internet users, just under a third said they trust the company less, while roughly one-fifth of respondents said the keynotes improved their trust in the company.

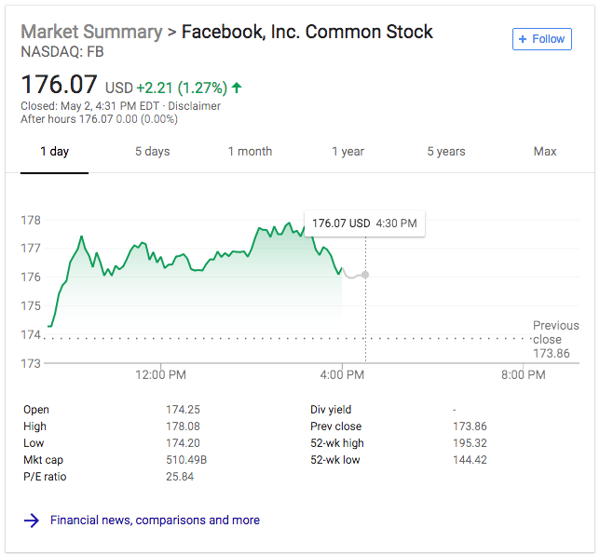

That has yet to show up financially, however -- and as of the NASDAQ closing bell today, Facebook's stock price was strong.

Source: Google

How all of this plays out in the long term remains to be seen. But the stark contrast between last year's F8 -- when Facebook announced innovative progress in the area of reading people's minds through their skin -- and this year's event is evident.

The type of pressure and scrutiny faced by the social network continues to evolve, as well. Despite Facebook's strong Q1 2018 earnings, the UK Parliament still demands testimony from Zuckerberg -- and recently stated that if he does not appear before members of parliament for questioning during his next visit to the UK, he shouldn't enter the region at all.

And as for the ethics -- that seems like it will be a slow, gradual road to fruition, if that's the conclusion that actually takes form.
